Detailed Case Exposition

Executive Summary (TL;DR)

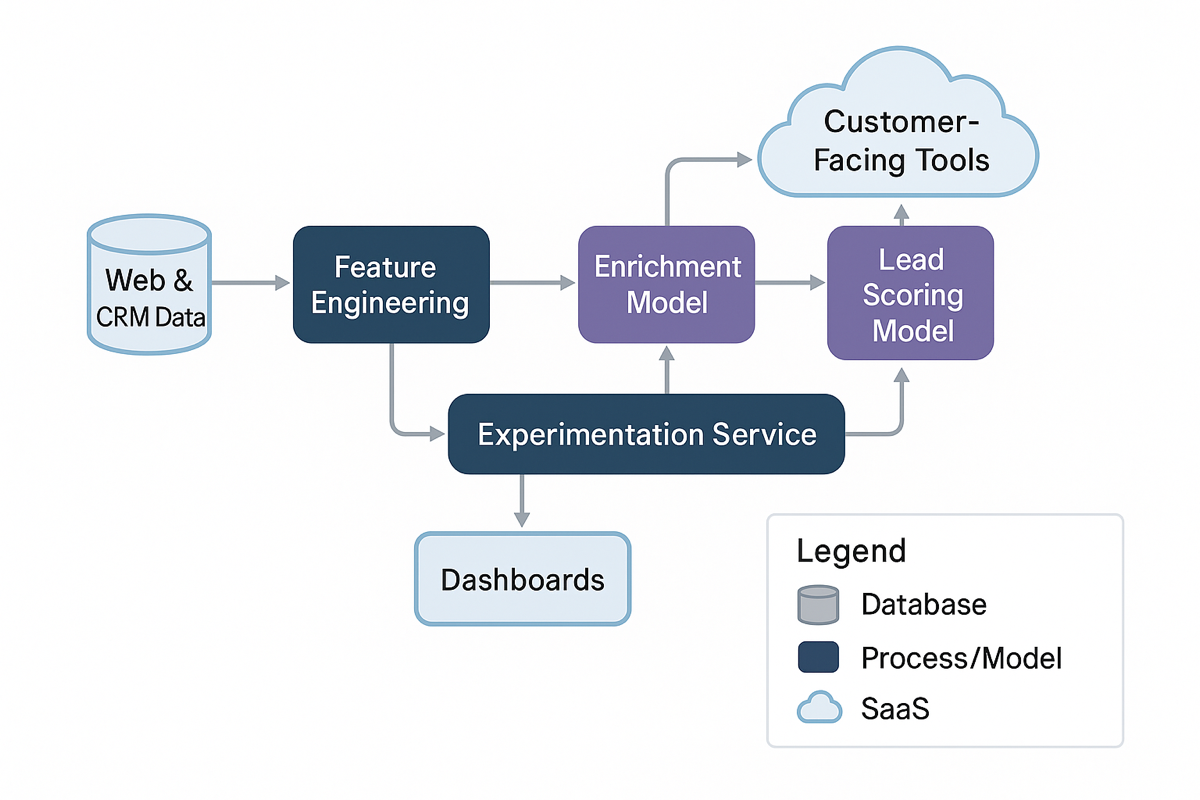

A production-grade AI framework that enriches and scores millions of leads each week and runs the experiments built on them, lifting LinkedIn sales revenue from AI-targeted leads by 10 % and cutting product-test insight cycles from weeks to days.

Business Outcome & Strategic Leverage

The framework turned LinkedIn’s data exhaust into monetisable lead signals and a repeatable experimentation testbed. This positioned Sales Navigator and the marketing tools as data-driven differentiators and accelerated revenue growth ahead of strategic launches.

1 · Strategic Context & Market Friction

- A rapidly growing prospect pool overwhelmed manual lead triage.

- Feature releases relied on slow, spreadsheet-driven A/B tests.

- Stakeholder trust gaps hindered adoption of early ML prototypes.

2 · Objectives & Delivery Constraints

- Mandate: ship the enrichment, scoring, and testing engine in under six months.

- Constraints: a ten-person pod, the existing Hadoop/Hive stack, and a requirement to embed in existing sales workflows.

- Trade-offs: favour interpretable tree-based models over deeper neural nets to earn stakeholder trust.

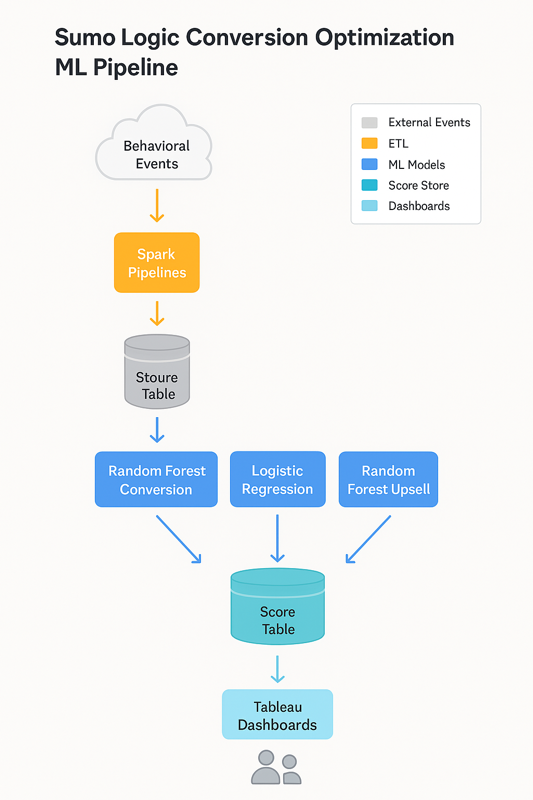

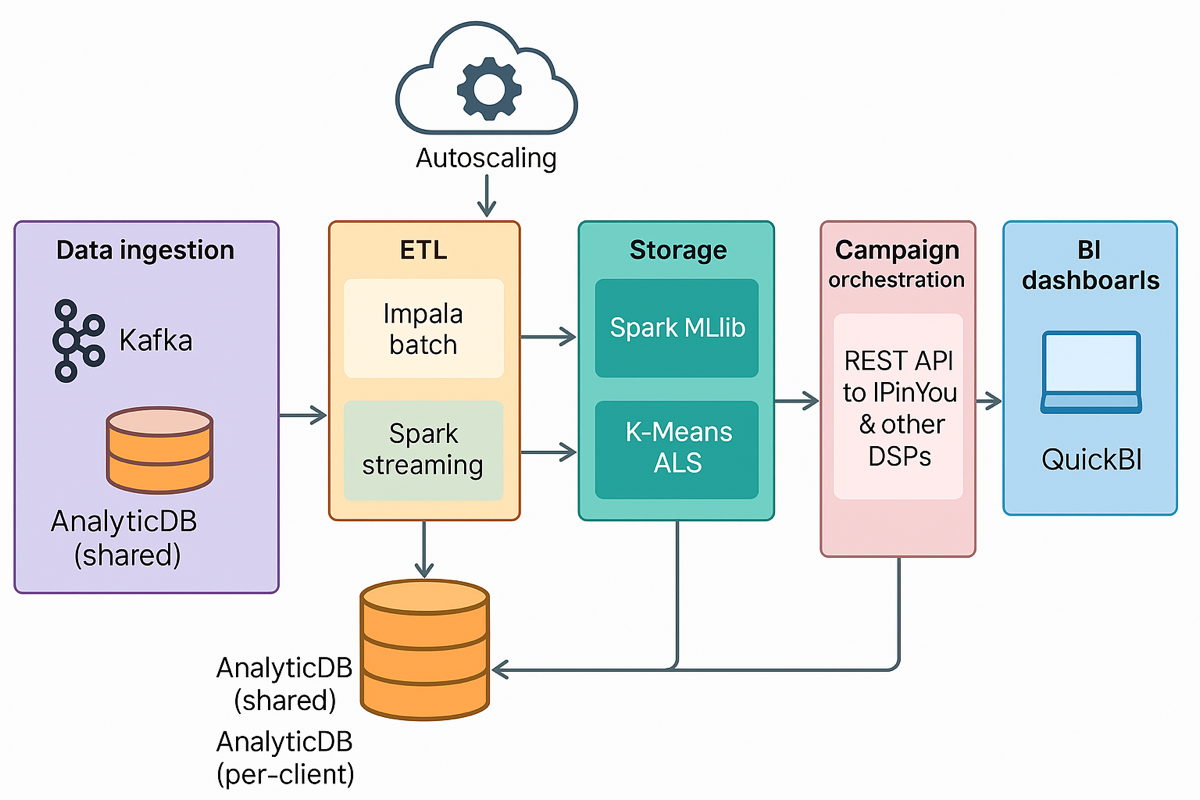

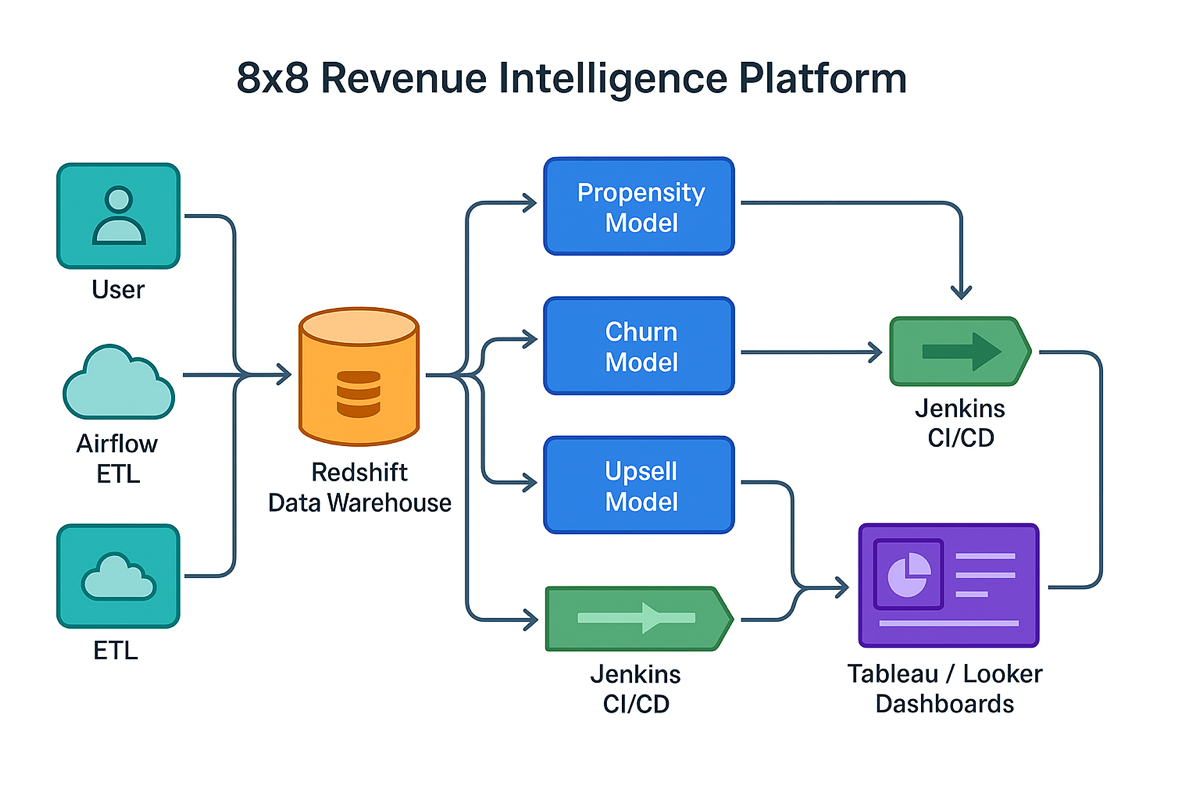

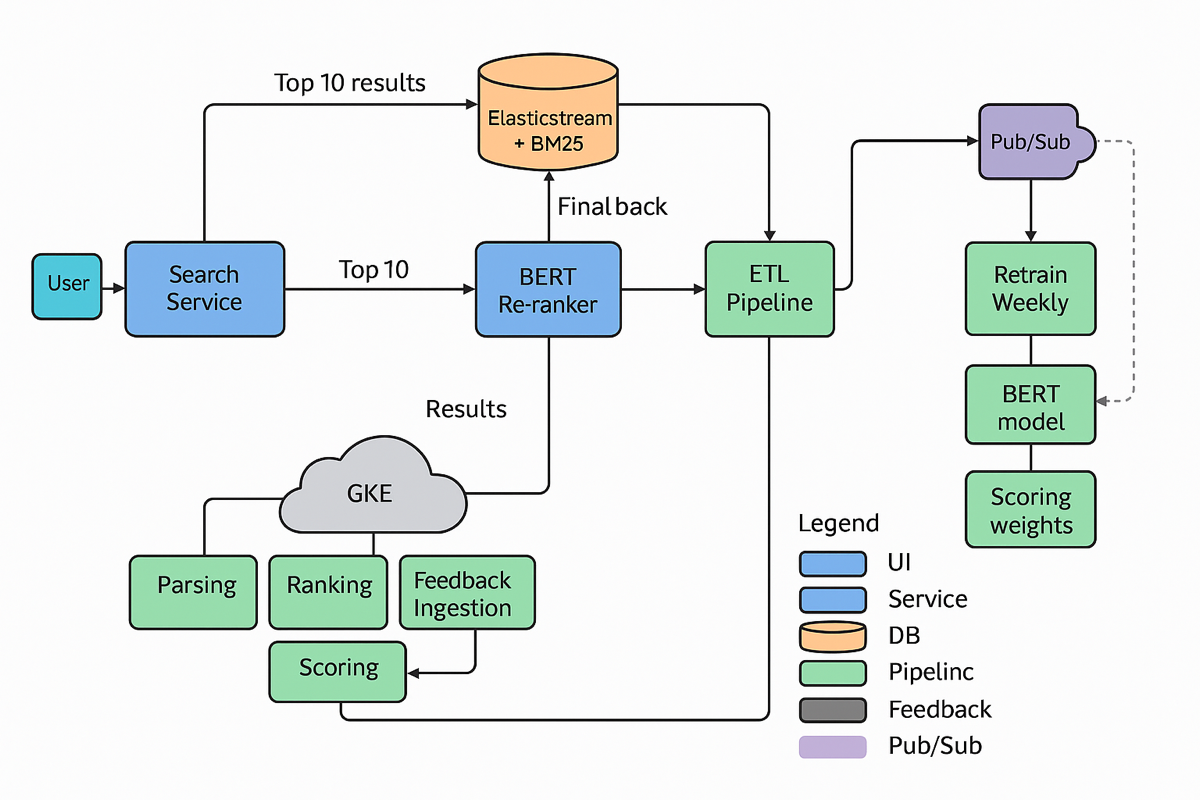

3 · Technical Architecture & Infrastructure Decisions

| Layer | Decision | Rationale |
|---|---|---|
| Feature engineering | Hadoop + Hive | Scales to multi-TB user signals |
| Enrichment model | Logistic regression | Fast, transparent fusion of web signals |
| Lead-scoring model | Random forest | High accuracy and feature importance |
| Experimentation | Custom A/B and multivariate (MVT) engine | Supports ML-variant switches and holdouts |
| Deployment | Nightly batch refresh with real-time API overlays | Minimal latency for sales tools |
| Dashboards | Internal insight UI | Live lift, confidence, and driver visuals |
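The table calls the logistic-regression enrichment model "transparent". A minimal sketch of why, under stated assumptions: the `LogisticEnricher` class, the signal names (`company_site_visits`, `recent_job_change`, `domain_match`), and the weights are all hypothetical, not the production model. Each signal contributes its weight times its value to the log-odds, so the top drivers of any enrichment probability can be read off directly.

```python
import math
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LogisticEnricher:
    """Hypothetical logistic-regression fusion of web signals into one probability.

    Coefficients are placeholders; a real model would learn them offline
    (for example from the Hive feature tables) and load them at scoring time.
    """
    intercept: float
    weights: dict[str, float] = field(default_factory=dict)

    def contributions(self, signals: dict[str, float]) -> dict[str, float]:
        # Each signal adds weight * value to the log-odds; absent signals count as 0.
        return {name: w * signals.get(name, 0.0) for name, w in self.weights.items()}

    def predict_proba(self, signals: dict[str, float]) -> float:
        z = self.intercept + sum(self.contributions(signals).values())
        # Numerically stable sigmoid: avoids overflow in exp() for large |z|.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)


# Hypothetical signal names and weights, for illustration only.
enricher = LogisticEnricher(
    intercept=-2.0,
    weights={"company_site_visits": 0.8, "recent_job_change": 1.2, "domain_match": 0.5},
)
lead = {"company_site_visits": 1.0, "recent_job_change": 1.0}
prob = enricher.predict_proba(lead)
# Rank signals by their contribution to this lead's log-odds.
drivers = sorted(enricher.contributions(lead).items(), key=lambda kv: kv[1], reverse=True)
```

Here the log-odds are -2.0 + 0.8 + 1.2 = 0, so the lead sits at a probability of 0.5, and `recent_job_change` is its largest driver. That per-signal breakdown is the kind of output a driver visual can display without extra tooling.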

Security and PII governance were enforced through internal policy-enforcement libraries.

4 · Implementation & System Workflows

- Hive jobs aggregate CRM, web, and in-product activity.

- Models score leads and accounts nightly; results land in a shared warehouse.

- The experimentation service assigns users to buckets, toggles model variants, and logs outcomes.

- Dashboards visualise lift, p-values, and feature drivers for PMs and go-to-market (GTM) leads.
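Two steps of that workflow can be sketched: deterministic bucketing, so a user always lands in the same arm (or the holdout), and the lift and p-value figures a dashboard reports. This is a minimal sketch under assumptions: SHA-256 hashing, a 5 % holdout, and a pooled two-proportion z-test are illustrative choices, and `assign_bucket`, `lift_and_p_value`, and the IDs used below are hypothetical, not the custom engine's API.

```python
import hashlib
import math


def assign_bucket(user_id: str, experiment: str, arms: list[str], holdout_pct: float = 0.05) -> str:
    """Deterministically map a user to 'holdout' or one of the experiment arms.

    Hashing experiment and user together keeps a user's assignment stable
    across sessions while keeping assignments independent across experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    u = int(digest[:15], 16) / 16**15  # approximately uniform in [0, 1)
    if u < holdout_pct:
        return "holdout"
    # Spread the remaining probability mass evenly across the arms.
    idx = int((u - holdout_pct) / (1.0 - holdout_pct) * len(arms))
    return arms[min(idx, len(arms) - 1)]


def lift_and_p_value(conv_control: int, n_control: int,
                     conv_treat: int, n_treat: int) -> tuple[float, float]:
    """Relative lift of treatment over control and a two-sided two-proportion z-test p-value."""
    p_c = conv_control / n_control
    p_t = conv_treat / n_treat
    pooled = (conv_control + conv_treat) / (n_control + n_treat)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n_control + 1.0 / n_treat))
    if p_c == 0 or se == 0:
        raise ValueError("lift or z-statistic is undefined for these counts")
    lift = (p_t - p_c) / p_c
    z = (p_t - p_c) / se
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return lift, p_value


# Hypothetical member ID and experiment name.
arm = assign_bucket("member-123", "lead_score_v2", ["control", "ml_variant"])
lift, p = lift_and_p_value(100, 1000, 120, 1000)
```

With 100 conversions out of 1000 in control and 120 out of 1000 in treatment, the lift is 20 % but the p-value is roughly 0.15. A visible lift on a dashboard still needs enough traffic behind it before it justifies a rollout.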

5 · User Experience & Product Storytelling

Sales reps open Sales Navigator to a pre-ranked list of accounts; marketers A/B-test creative against ML-powered segments; PMs use real-time experiment boards to decide on rollouts.

6 · Performance Outcomes & Measurable Impact

| KPI | Pre-Framework | Post-Framework |
|---|---|---|
| Sales revenue from AI-targeted leads | Baseline | +10 % |
| Time to insight for product tests | Weeks | Days |
| Share of rollouts covered by experiments | < 30 % | > 80 % |

7 · Adoption & Market Strategy

An initial pilot with 100 sellers grew to more than 10 000 users worldwide. Transparent dashboards and feature-importance views built trust, and AI scores became a staple metric in pipeline reviews.
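Random forests expose impurity-based importances, but feature-importance views like these can also be built with permutation importance, which works for any scoring model. The sketch below swaps in that technique as an assumption: shuffle one feature across rows, re-score, and measure the drop in accuracy. `toy_model`, the `intent` and `noise` signals, and the helper names are hypothetical.

```python
import random
from typing import Callable

Row = dict[str, float]


def accuracy(model: Callable[[Row], float], rows: list[Row], labels: list[int],
             threshold: float = 0.5) -> float:
    """Share of rows where thresholding the model's score matches the label."""
    hits = sum((model(r) >= threshold) == bool(y) for r, y in zip(rows, labels))
    return hits / len(rows)


def permutation_importance(model: Callable[[Row], float], rows: list[Row], labels: list[int],
                           features: list[str], n_repeats: int = 5, seed: int = 0) -> dict[str, float]:
    """Mean accuracy drop when one feature's values are shuffled across rows."""
    rng = random.Random(seed)
    base = accuracy(model, rows, labels)
    importances: dict[str, float] = {}
    for feature in features:
        drops = []
        for _ in range(n_repeats):
            column = [r[feature] for r in rows]
            rng.shuffle(column)
            # Break the link between this feature and the label; keep everything else.
            permuted = [{**r, feature: v} for r, v in zip(rows, column)]
            drops.append(base - accuracy(model, permuted, labels))
        importances[feature] = sum(drops) / n_repeats
    return importances


# Hypothetical toy scorer that only looks at an "intent" signal.
def toy_model(row: Row) -> float:
    return 1.0 if row["intent"] > 0.5 else 0.0


data_rng = random.Random(1)
rows = [{"intent": data_rng.random(), "noise": data_rng.random()} for _ in range(200)]
labels = [int(r["intent"] > 0.5) for r in rows]
importance = permutation_importance(toy_model, rows, labels, ["intent", "noise"])
```

Because the toy model ignores `noise`, shuffling it leaves accuracy unchanged and its importance is exactly zero, while shuffling `intent` drops accuracy sharply. A seller can read that contrast at a glance, which is what makes such views useful for building trust.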

8 · Feedback-Driven Evolution

Experiment telemetry revealed score drift in emerging markets, and rapid retraining restored precision. Multivariate tests of score thresholds then delivered further conversion lift without code changes.
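The case does not say how drift was detected. One common, simple option is the Population Stability Index (PSI), which compares the distribution of scores in a reference window with a recent window. The sketch below swaps in that technique as an assumption; the bin count, `eps`, the 0.25 retraining threshold (a commonly cited rule of thumb), and the synthetic scores are illustrative.

```python
import math


def psi(baseline: list[float], current: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a current sample of scores.

    Scores are assumed to be probabilities in [0, 1] and are bucketed into
    equal-width bins; eps keeps empty bins from producing log(0).
    """
    def proportions(scores: list[float]) -> list[float]:
        counts = [0] * bins
        for s in scores:
            idx = min(max(int(s * bins), 0), bins - 1)  # clamp out-of-range scores
            counts[idx] += 1
        return [max(c / len(scores), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical data: training-time scores vs. scores compressed toward zero in a new market.
baseline_scores = [(i + 0.5) / 1000 for i in range(1000)]
drifted_scores = [s * 0.5 for s in baseline_scores]
drift = psi(baseline_scores, drifted_scores)
needs_retraining = drift > 0.25  # rule-of-thumb threshold, an assumption here
```

Computing PSI per market on a schedule would flag a shift like the one described before precision visibly degrades. The threshold itself is a tunable setting rather than a law.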