AI & Data Concept Innovation Pipeline

Investor-ready product concepts that turn billion-dollar pain points into quantified business cases, build-ready specs, and clear ROI projections—so teams can move from whiteboard to funded roadmap without guesswork.

Concept Portfolio Summary

From Compliance Shield to Zero-Waste Retail

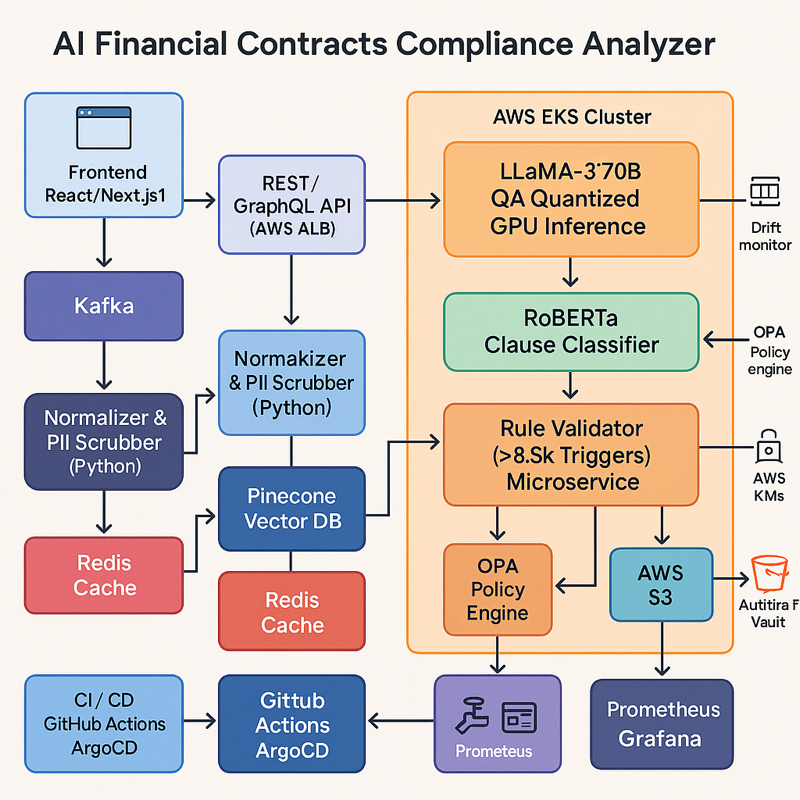

AI Financial Contract Compliance Checker

Financial institutions bleed millions to regulatory churn; the AI Financial Contract Compliance Checker auto-scans loan and investment agreements against 8 500+ SEC/OCC/AML rules, projecting 25 % review-hour savings and a 15 % cut in enforcement findings within a year.

- Problem / Opportunity: North American banks spend $61 B annually on compliance, yet still face 240 000 regulatory alerts, and the average non-compliance event costs $14.8 M.

- Solution: The AI Financial Contract Compliance Checker combines a LLaMA-3 model fine-tuned on legal text with a rules engine and retrieval-augmented generation (RAG) to flag risky clauses and suggest compliant rewrites before execution. It integrates via APIs with contract lifecycle management (CLM) and loan-origination systems.

- Impact: Projected 20–30 % reduction in contract-review hours and ≥15 % fewer audit findings, delivering payback in under 12 months.
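The rules half of the rules/RAG step can be illustrated as a pattern pass over each clause. This minimal Python sketch assumes hypothetical rule IDs, regex patterns, and rewrite hints (they are not actual SEC, OCC, or AML text) and leaves out the fine-tuned LLaMA-3 and retrieval layers entirely; in the concept, the LLM would handle clauses that simple patterns cannot capture.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    rule_id: str     # hypothetical internal rule identifier
    pattern: str     # regex that signals a potentially risky clause
    suggestion: str  # compliant rewrite hint shown to the reviewer


@dataclass
class Finding:
    clause_index: int
    rule_id: str
    clause: str
    suggestion: str


# Hypothetical rules for illustration only; not real regulatory text.
RULES = [
    Rule("AML-001", r"\bcash\b.*\bwithout\b.*\bverification\b",
         "Require customer identity verification before cash disbursement."),
    Rule("OCC-014", r"\bvariable rate\b(?!.*\bcap\b)",
         "State a maximum rate cap for variable-rate terms."),
]


def split_clauses(text: str) -> list[str]:
    # Naive splitter: treats every '.' or ';' as a clause boundary.
    return [c.strip() for c in re.split(r"[.;]\s*", text) if c.strip()]


def check_contract(text: str, rules: list[Rule] = RULES) -> list[Finding]:
    """Return one Finding per (clause, rule) pair whose pattern matches."""
    findings = []
    for i, clause in enumerate(split_clauses(text)):
        for rule in rules:
            if re.search(rule.pattern, clause, flags=re.IGNORECASE):
                findings.append(Finding(i, rule.rule_id, clause, rule.suggestion))
    return findings
```

Because `split_clauses` splits on every period, a value such as "9.5%" would break a clause apart; a production parser would need proper sentence segmentation.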

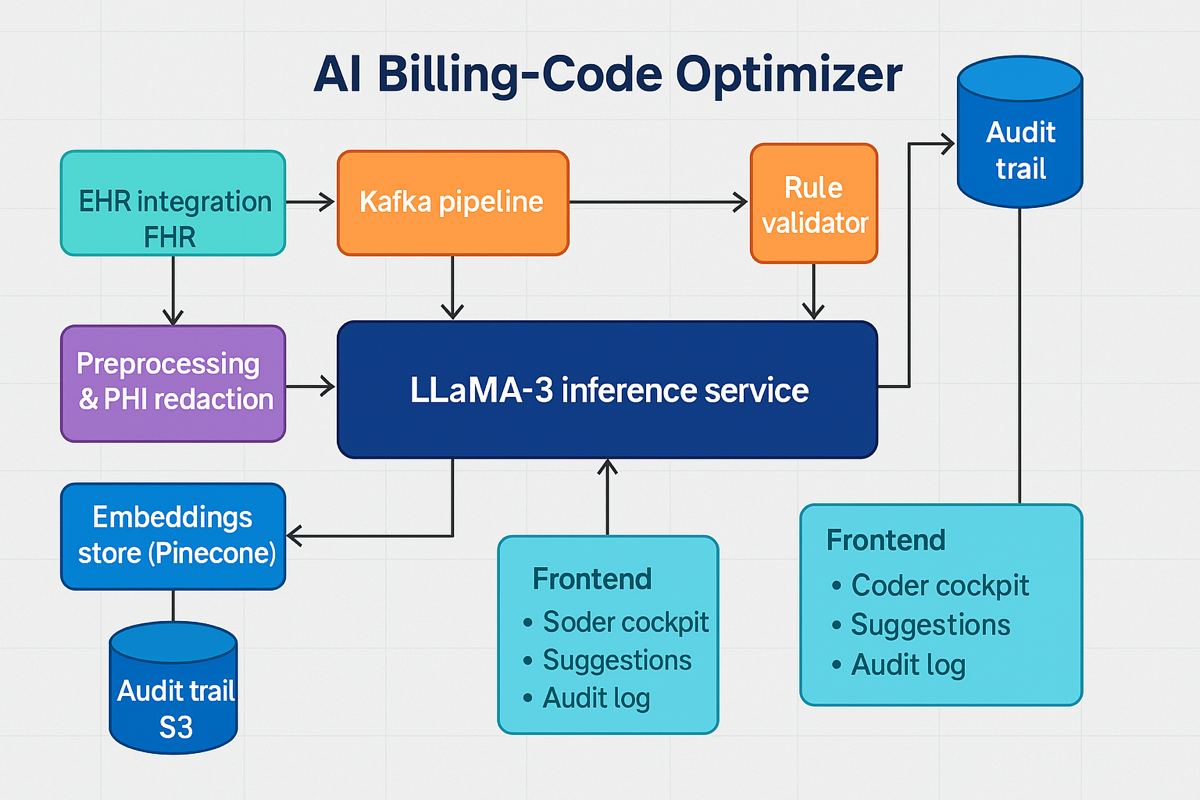

AI Medical Billing-Code Optimizer

U.S. hospitals bleed $19.7 B on claim appeals; the AI Medical Billing-Code Optimizer embeds in EHRs to auto-select compliant CPT/ICD codes, projecting 3–5 % revenue lift and 25–30 % coder-hour savings within 9 months.

- PROBLEM / OPPORTUNITY: Appeals cost $19.7 B yearly; 15 % of initial claims are denied, putting 3.3 % of net patient revenue at risk, while coder capacity runs 25 % short of demand.

- SOLUTION: AI Medical Billing-Code Optimizer parses clinical notes and lab results with an LLM plus RAG, ranks the top CPT/ICD code candidates, flags payer-specific modifiers, and lets coders accept a suggestion with one click inside Epic, Cerner, or Athena.

- IMPACT: Projected 3–5 % reimbursement uplift, ≥30 % denial reduction, and 25–30 % fewer manual coding hours, delivering payback inside 6–9 months.
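The rank-and-flag step can be sketched once candidate codes already carry confidence scores. This sketch assumes those scores come from the LLM + RAG stage; the payer rule table, the payer name `PayerA`, and the modifier note are hypothetical placeholders, not real payer policy.

```python
from dataclasses import dataclass, field


@dataclass
class CodeSuggestion:
    code: str                # CPT or ICD-10 code string
    score: float             # model confidence in [0, 1], assumed from the LLM + RAG step
    modifier_flags: list[str] = field(default_factory=list)


# Hypothetical payer rules: payer -> {code -> modifier note for the coder}.
PAYER_MODIFIER_RULES = {
    "PayerA": {"99213": "Review modifier 25 if a same-day procedure is billed"},
}


def rank_codes(candidates: dict[str, float], payer: str,
               top_k: int = 3) -> list[CodeSuggestion]:
    """Return the top_k candidates by score, annotated with payer-specific modifier flags."""
    rules = PAYER_MODIFIER_RULES.get(payer, {})
    ranked = sorted(candidates.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    suggestions = []
    for code, score in ranked:
        flags = [rules[code]] if code in rules else []
        suggestions.append(CodeSuggestion(code, score, flags))
    return suggestions
```

Keeping the payer rules in a plain lookup table separates what the model predicts (which codes fit the note) from what each payer requires, so rule updates do not require retraining.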

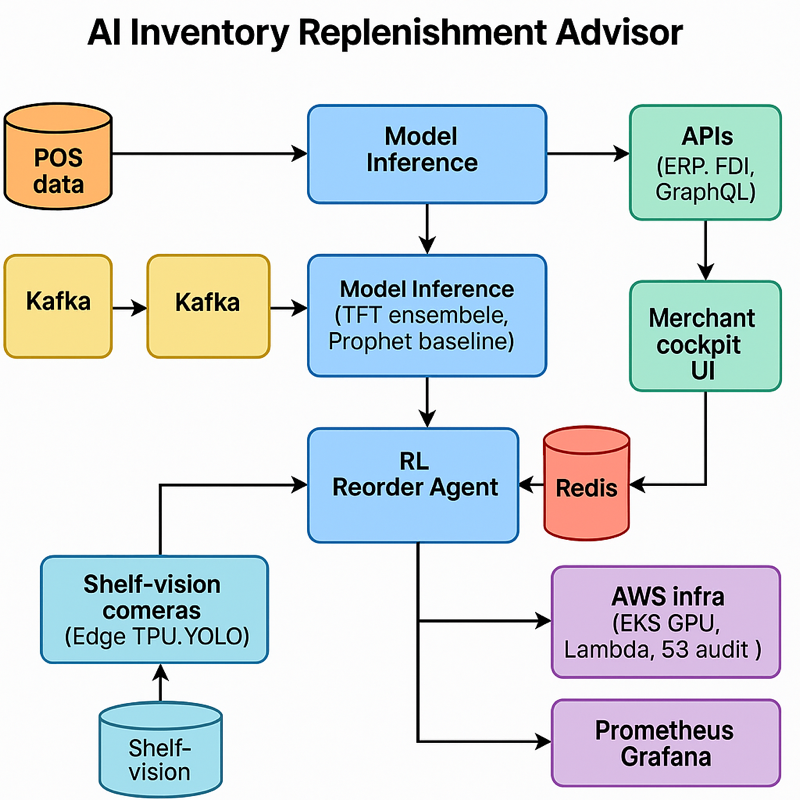

AI Inventory Replenishment Advisor

Inventory distortion drains $1.77 trillion from retailers; the AI Inventory Replenishment Advisor embeds in merchandising systems to forecast demand and auto-replenish shelves, lifting sales 4–6 % and cutting waste 10–12 % with ROI in 9–12 months.

- PROBLEM / OPPORTUNITY: Inventory distortion costs global retailers $1.77 T annually; U.S. grocers lose 5.9 % of sales to out-of-stocks and discard 30 % of their perishables, eroding profit and reputation.

- SOLUTION: AI Inventory Replenishment Advisor blends Temporal Fusion Transformer (TFT) demand forecasts, reinforcement-learning reorder policies, and computer-vision shelf cameras to push just-in-time purchase orders through ERP and warehouse APIs.

- IMPACT: Early pilots show a 4–6 % sales lift, ≥30 % stock-out reduction, and 10–12 % waste cut; payback within 9–12 months.
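To make the reorder step concrete, this sketch swaps in a classic periodic-review order-up-to heuristic for the reinforcement-learning policy and takes a plain list of daily forecasts in place of TFT output. The safety-stock term assumes independent, normally distributed daily forecast errors with a common standard deviation; the function and parameter names are illustrative.

```python
import math
from statistics import NormalDist


def order_quantity(daily_forecast: list[float], forecast_sd: float,
                   lead_time_days: int, review_days: int,
                   on_hand: float, on_order: float,
                   service_level: float = 0.95) -> int:
    """Periodic-review order-up-to heuristic (a stand-in for the RL reorder policy)."""
    # Protection interval: stock must cover the lead time plus one review period.
    horizon = lead_time_days + review_days
    # Expected demand over the protection interval, taken from the forecast.
    expected = sum(daily_forecast[:horizon])
    # Safety stock: z-score for the target service level times the error SD
    # scaled by sqrt(horizon), assuming independent daily errors.
    z = NormalDist().inv_cdf(service_level)
    safety = z * forecast_sd * math.sqrt(horizon)
    order_up_to = expected + safety
    # Inventory position counts stock on hand plus orders already in transit.
    inventory_position = on_hand + on_order
    return max(0, math.ceil(order_up_to - inventory_position))
```

For example, with a flat forecast of 10 units/day, a 2-day lead time, a 1-day review period, a forecast SD of 2, 12 units on hand, and 5 on order, a 95 % service level yields an order of 19 units.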

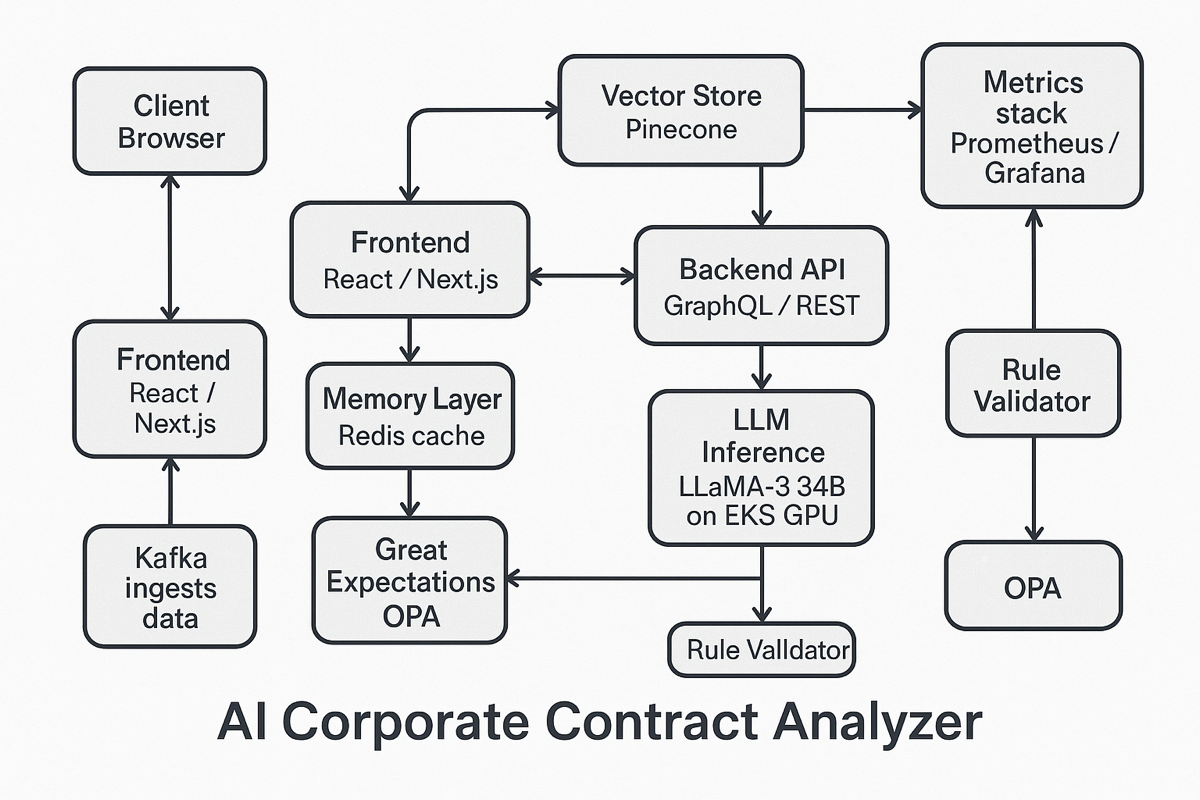

AI Corporate Contract Analyzer

Plug hidden revenue leaks and cut legal drag. The AI Corporate Contract Analyzer ingests every contract, flags risky clauses, and proposes redlines; it is projected to cut review hours by ≈ 25 % and post-execution disputes by ≈ 11 %, delivering ROI inside 9–12 months.

- PROBLEM / OPPORTUNITY: Poor contracting drains ≈ 9 % of annual revenue, review fees run as high as $2.5 k/hour, and legal teams lose half their time to manual clause checks.

- SOLUTION: A context-aware NLP + RAG engine maps each clause to internal policy, ranks its risk, and offers one-click rewrites inside DocuSign CLM, Ironclad, or SharePoint.

- IMPACT: Modeled results indicate review cycles could shrink 25–30 %, dispute spend could drop 10–12 %, and $4 M in working capital could be freed through faster supplier onboarding.
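Mapping clauses to policy and ranking risk can be sketched as scoring extracted contract terms against policy limits. This assumes the NLP + RAG engine has already pulled numeric terms out of each clause; the `POLICY` table, term names, and severity weights are hypothetical, and the score used here (relative breach size times a severity weight) is one simple choice among many.

```python
from dataclasses import dataclass


@dataclass
class ClauseTerm:
    clause_id: str
    term: str     # e.g. "payment_days", "liability_cap_multiple" (hypothetical names)
    value: float


# Hypothetical policy: term -> (limit, direction, severity weight).
# "max": values above the limit breach policy; "min": values below it do.
POLICY = {
    "payment_days": (45, "max", 2.0),
    "liability_cap_multiple": (1.0, "min", 3.0),
}


def risk_score(t: ClauseTerm) -> float:
    """Severity weight times relative breach size; 0.0 if compliant or unmapped."""
    if t.term not in POLICY:
        return 0.0
    limit, direction, weight = POLICY[t.term]
    breach = (t.value - limit) if direction == "max" else (limit - t.value)
    return weight * max(0.0, breach) / limit


def rank_risks(terms: list[ClauseTerm]) -> list[tuple[str, float]]:
    """Return (clause_id, score) for breaching clauses, highest risk first."""
    scored = [(t.clause_id, risk_score(t)) for t in terms]
    return sorted([s for s in scored if s[1] > 0], key=lambda s: s[1], reverse=True)
```

Normalizing by the limit lets breaches on different scales (days versus multiples of contract value) be compared on one ranked list.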

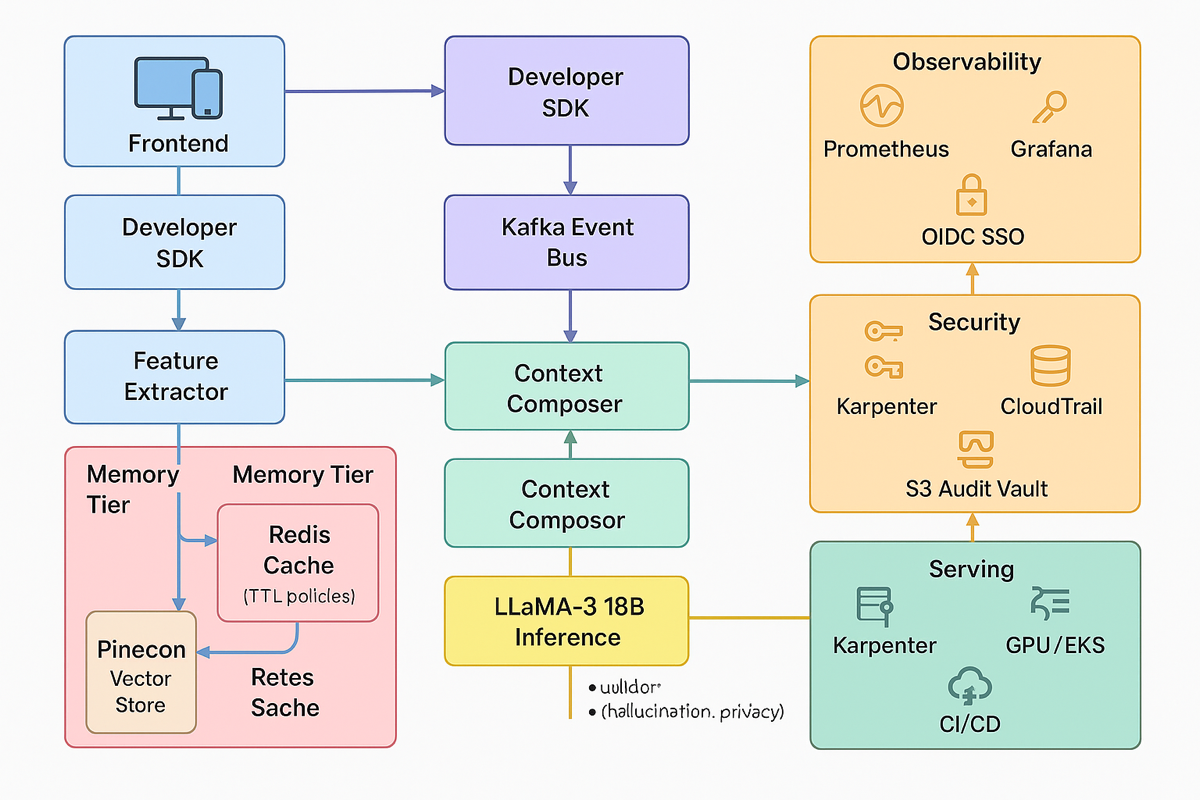

Cache-Augmented Generation (CAG) Personalization Layer

LLM sessions waste tokens and wait time. CAG keeps hot user memories in a sub-millisecond Redis cache, cutting token spend by up to 90 % and speeding answers by ≈ 80 %, which is projected to boost personalized-chat revenue 12–15 % with ROI inside 9 months.

- PROBLEM / OPPORTUNITY: Redundant tokens and 200–400 ms RAG look-ups bloat costs; 81 % of consumers favor brands that remember them, yet chatbots forget after every turn, capping personalization-driven revenue.

- SOLUTION: Cache-Augmented Generation stores high-value user context in Redis (<1 ms reads), injects it into prompts via a LangChain router, and routes to RAG when a query needs fresh facts, cutting latency and spend without sacrificing accuracy.

- IMPACT: Early pilots cut LLM token costs ≥50 %, accelerate responses ≥70 %, and lift personalization-linked revenue 12–15 %; payback achieved within 6–9 months.
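The cache-first flow can be sketched without a Redis server. In this sketch an in-process TTL dictionary stands in for Redis, and the `needs_fresh_facts` callable stands in for the LangChain router's decision; `MemoryCache`, `build_context`, and the key format are hypothetical names for illustration only.

```python
import time
from typing import Callable, Optional


class MemoryCache:
    """In-process TTL cache standing in for Redis in this sketch."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: evict and report a miss
            return None
        return value

    def set(self, key: str, value: str) -> None:
        self._store[key] = (self.clock() + self.ttl, value)


def build_context(user_id: str, query: str, cache: MemoryCache,
                  load_profile: Callable[[str], str],
                  needs_fresh_facts: Callable[[str], bool],
                  retrieve: Callable[[str], str]) -> str:
    """Cache-first user memory; RAG retrieval only when the query needs fresh facts."""
    key = f"user:{user_id}:memory"
    memory = cache.get(key)
    if memory is None:  # cache miss: load from the slower store, then cache it
        memory = load_profile(user_id)
        cache.set(key, memory)
    parts = [f"User memory: {memory}"]
    if needs_fresh_facts(query):  # hypothetical router decision
        parts.append(f"Retrieved: {retrieve(query)}")
    return "\n".join(parts)
```

In a deployment, `MemoryCache` would be replaced by a Redis client with key expiry; the injectable `clock` exists only to make expiry easy to test.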